Strong Reasons To Avoid DeepSeek ChatGPT


Author: Connie | Date: 25-03-17 08:20 | Views: 3 | Comments: 0


I already laid out last fall how every facet of Meta’s business benefits from AI; a big barrier to realizing that vision is the cost of inference, which means that dramatically cheaper inference - and dramatically cheaper training, given the need for Meta to stay on the cutting edge - makes that vision much more achievable. AI industry, and the benefits or not of open source for innovation. Using GroqCloud with Open WebUI is possible thanks to an OpenAI-compatible API that Groq provides. Moreover, the technique was a simple one: instead of trying to evaluate step by step (process supervision), or doing a search of all possible answers (a la AlphaGo), DeepSeek encouraged the model to try several different answers at a time and then graded them according to the two reward functions. Special thanks to those who help make my writing possible and sustainable. OpenAI does not have some sort of special sauce that can’t be replicated.
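The idea of trying several answers at once and grading them against each other can be sketched roughly as follows. This is a minimal illustration, not DeepSeek's implementation: the function name `group_relative_advantages`, the `eps` constant, and the sample reward values are all assumptions made for the example. It normalizes each answer's reward against the mean and spread of its own group, so answers that beat their siblings get a positive score and the rest get a negative one.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Score each sampled answer relative to the other answers in its group.

    `rewards` holds one total reward per answer sampled for the SAME question.
    The name and the eps value are illustrative assumptions.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    # eps avoids division by zero when every answer received the same reward.
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical total rewards for four answers to one question.
rewards = [2.0, 0.0, 1.0, 1.0]
advantages = group_relative_advantages(rewards)

for reward, advantage in zip(rewards, advantages):
    print(f"reward={reward:.1f} advantage={advantage:+.3f}")
```

Because the scores are relative to the group, no separate step-by-step judge or exhaustive search is needed; the only inputs are the per-answer rewards.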


Because some controversial cases that drew public criticism for their lenient sentences have been withdrawn from China Judgments Online, there are concerns about whether AI based on fragmented judicial data can reach unbiased decisions. I asked why the stock prices are down; you just painted a positive picture! My picture is of the long run; today is the short run, and it seems likely the market is working through the shock of R1’s existence. This famously ended up working better than other more human-guided techniques. During this phase, DeepSeek-R1-Zero learns to allocate more thinking time to a problem by reevaluating its initial approach. A particularly intriguing phenomenon observed during the training of DeepSeek-R1-Zero is the occurrence of an "aha moment". This moment is not only an "aha moment" for the model but also for the researchers observing its behavior. It underscores the power and beauty of reinforcement learning: rather than explicitly teaching the model how to solve a problem, we simply provide it with the right incentives, and it autonomously develops advanced problem-solving strategies. DeepSeek gave the model a set of math, code, and logic questions, and set two reward functions: one for the right answer, and one for the right format that utilized a thinking process.
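The two reward functions described above might look something like the sketch below. It is only an illustration under stated assumptions: the `<think>`/`<answer>` tag layout, the exact-match comparison, the 0/1 scoring, and every function name here are hypothetical choices for the example, not DeepSeek's actual code.

```python
import re

# Assumed output layout: reasoning inside <think>, final result inside <answer>.
FORMAT_PATTERN = re.compile(
    r"<think>.+?</think>\s*<answer>.+?</answer>", re.DOTALL
)
ANSWER_PATTERN = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def format_reward(completion: str) -> float:
    """1.0 if the whole completion follows the think-then-answer layout."""
    return 1.0 if FORMAT_PATTERN.fullmatch(completion.strip()) else 0.0


def accuracy_reward(completion: str, expected: str) -> float:
    """1.0 if the extracted answer exactly matches the reference answer."""
    match = ANSWER_PATTERN.search(completion)
    if match is None:
        return 0.0
    return 1.0 if match.group(1).strip() == expected.strip() else 0.0


def total_reward(completion: str, expected: str) -> float:
    """Sum of the two rewards (equal weighting is an assumption)."""
    return accuracy_reward(completion, expected) + format_reward(completion)


well_formed = "<think>2 + 2 equals 4.</think><answer>4</answer>"
no_reasoning = "<answer>4</answer>"
wrong_answer = "<think>2 + 2 equals 5.</think><answer>5</answer>"

for sample in (well_formed, no_reasoning, wrong_answer):
    print(total_reward(sample, "4"), sample)
```

Both checks are rule-based, so nothing in the grading step requires a human or a learned judge; a real setup for code or math questions would likely need a more forgiving answer comparison than exact string equality.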

It has the ability to think through a problem, producing much higher quality results, particularly in areas like coding, math, and logic (but I repeat myself). R1 is a reasoning model like OpenAI’s o1. During training, DeepSeek-R1-Zero naturally emerged with numerous powerful and interesting reasoning behaviors. Following this, we perform reasoning-oriented RL like DeepSeek-R1-Zero. This, by extension, probably has everyone nervous about Nvidia, which obviously has a big impact on the market. In the long run, DeepSeek could become a significant player in the evolution of search technology, especially as AI and privacy concerns continue to shape the digital landscape. People who want to use DeepSeek Chat for more advanced tasks, or to call its APIs for backend coding tasks, have to pay. This is one of the most powerful affirmations yet of The Bitter Lesson: you don’t need to teach the AI how to reason, you can just give it enough compute and data and it will teach itself! Think of it like learning by example - rather than relying on massive data centers or raw computing power, DeepSeek mimics the answers an expert would give in areas like astrophysics, Shakespeare, and Python coding, but in a much lighter way.

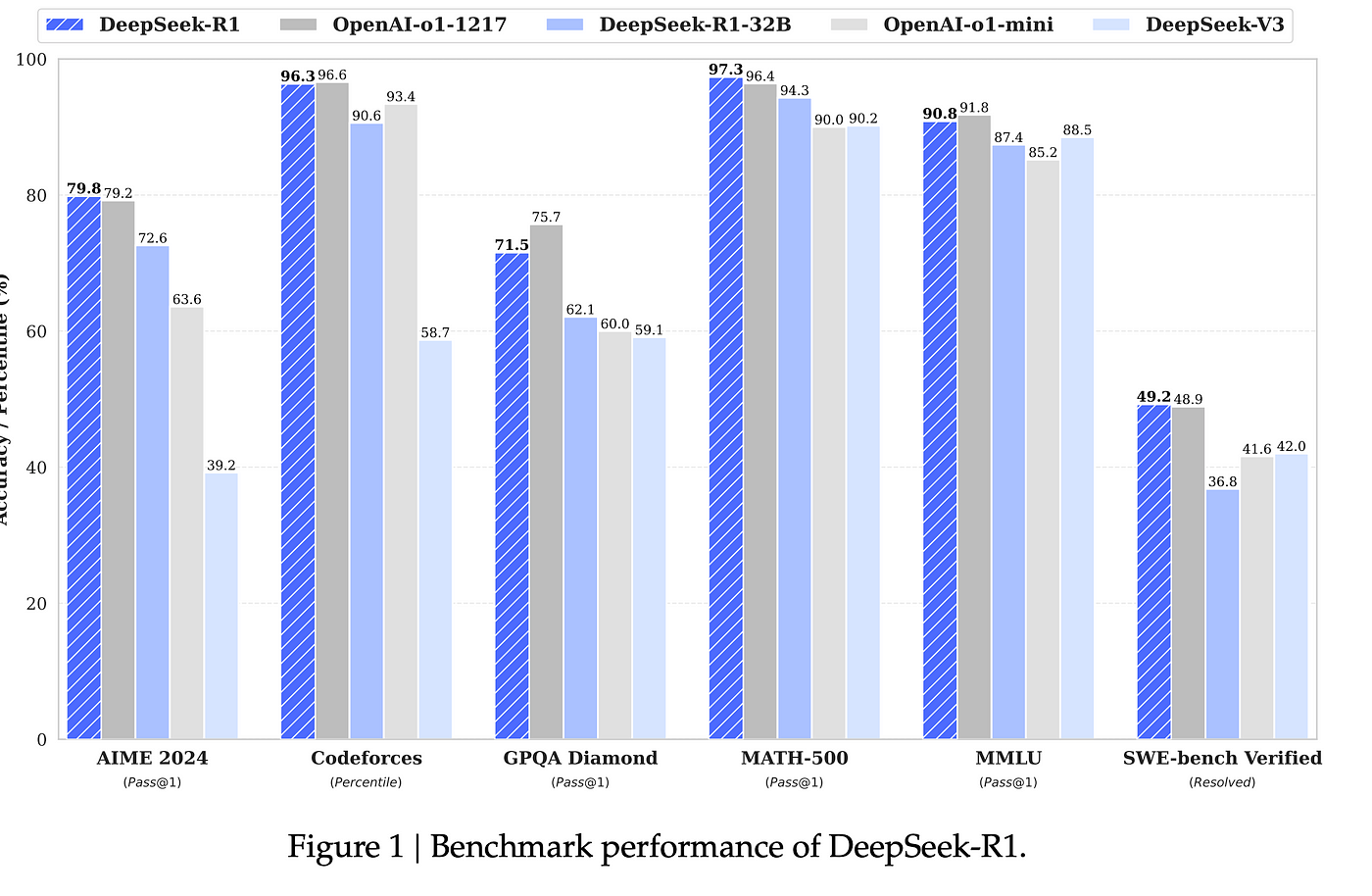

Another reason DeepSeek is shaking up the AI industry - its language model requires far fewer resources to operate. Specifically, we begin by collecting thousands of cold-start data to fine-tune the DeepSeek-V3-Base model. After thousands of RL steps, DeepSeek-R1-Zero exhibits super performance on reasoning benchmarks. However, DeepSeek-R1-Zero encounters challenges such as poor readability and language mixing. The Qwen-VL series is a line of visual language models that combines a vision transformer with an LLM. In this paper, we take the first step toward improving language model reasoning capabilities using pure reinforcement learning (RL). This sounds a lot like what OpenAI did for o1: DeepSeek started the model out with a bunch of examples of chain-of-thought thinking so it could learn the proper format for human consumption, and then did the reinforcement learning to enhance its reasoning, along with a number of editing and refinement steps; the output is a model that appears to be very competitive with o1.


