Fraud, Deceptions, And Downright Lies About DeepSeek Exposed

Author: Demetrius | Posted: 25-03-18 05:40

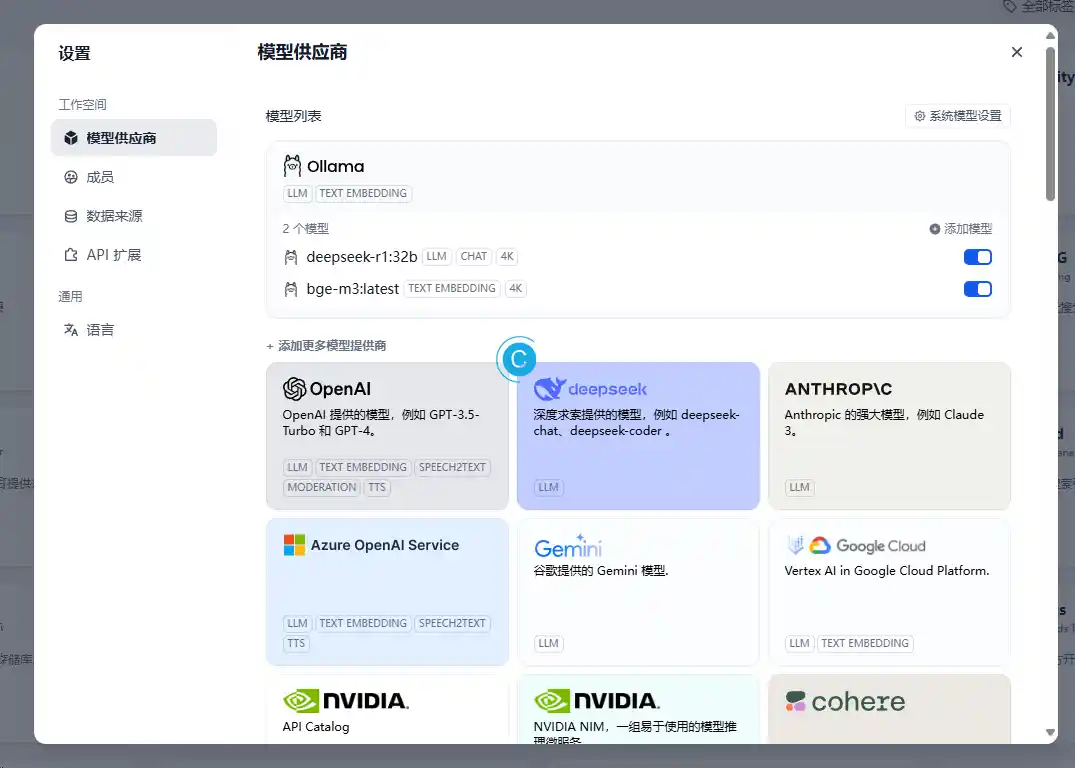

However, prior to this work, FP8 was seen as efficient but less effective; DeepSeek demonstrated how it can be used effectively. LLM: Support DeepSeek-V3 model with FP8 and BF16 modes for tensor parallelism and pipeline parallelism. "As for the training framework, we design the DualPipe algorithm for efficient pipeline parallelism, which has fewer pipeline bubbles and hides most of the communication during training through computation-communication overlap. This overlap ensures that, as the model further scales up, as long as we maintain a constant computation-to-communication ratio, we can still employ fine-grained experts across nodes while achieving a near-zero all-to-all communication overhead." The constant computation-to-communication ratio and near-zero all-to-all communication overhead are striking relative to "normal" ways of scaling distributed training, which typically just mean "add more hardware to the pile". However, GRPO takes a rules-based approach: it works well for problems that have an objective answer, such as coding and math, but it might struggle in domains where answers are subjective or variable. Despite facing restricted access to cutting-edge Nvidia GPUs, Chinese AI labs have been able to produce world-class models, illustrating the importance of algorithmic innovation in overcoming hardware limitations. Although DeepSeek has demonstrated remarkable efficiency in its operations, access to more advanced computational resources could accelerate its progress and improve its competitiveness against companies with greater computational capabilities.
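
To make the "rules-based" point concrete, here is a minimal sketch of what a rule-based reward for a math problem could look like. It is an illustration only, not DeepSeek's implementation: the `Answer: <number>` output format and the names `extract_answer` and `rule_based_reward` are assumptions made for this example.

```python
import re
from typing import Optional

# Hypothetical output convention: the completion must end with "Answer: <number>".
ANSWER_PATTERN = re.compile(r"Answer:\s*(-?\d+(?:\.\d+)?)\s*$")


def extract_answer(completion: str) -> Optional[str]:
    """Return the final numeric answer as text, or None if the format is not followed."""
    match = ANSWER_PATTERN.search(completion.strip())
    return match.group(1) if match else None


def rule_based_reward(completion: str, reference: str) -> float:
    """Score 1.0 when the extracted answer equals the reference number, else 0.0.

    The rule is purely mechanical, so it only applies when a single
    objectively correct answer exists.
    """
    answer = extract_answer(completion)
    if answer is None:
        return 0.0
    return 1.0 if float(answer) == float(reference) else 0.0


if __name__ == "__main__":
    print(rule_based_reward("2 + 2 is four. Answer: 4", "4"))
    print(rule_based_reward("A thoughtful essay with no final line.", "4"))
```

A check like this is cheap and hard to game for arithmetic, but there is no comparable rule for grading an essay or a piece of advice, which is the limitation described above.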

While the base models are still very large and require data-center-class hardware to operate, many of the smaller models can be run on much more modest hardware. The time spent memorizing all the characters necessary to be literate, so the theory went, not only put China at a profound competitive disadvantage with nations that employed far more efficient alphabets, but was also physically and mentally unhealthy! It will be interesting to track the trade-offs as more people use it in different contexts. R1's biggest weakness seemed to be its English proficiency, yet it still performed better than others in areas like discrete reasoning and handling long contexts. Over 2 million posts in February alone have mentioned "DeepSeek fortune-telling" on WeChat, China's biggest social platform, according to WeChat Index, a tool the company released to monitor its trending keywords. 1.6 million. That's how many times the DeepSeek mobile app had been downloaded as of Saturday, Bloomberg reported, making it the No. 1 app in iPhone stores in Australia, Canada, China, Singapore, the US and the U.K.

The DeepSeek startup is less than two years old (it was founded in 2023 by 40-year-old Chinese entrepreneur Liang Wenfeng) and released its open-source models for download in the United States in early January, where it has since surged to the top of the iPhone download charts, surpassing the app for OpenAI's ChatGPT. Lawmakers in Congress last year voted on an overwhelmingly bipartisan basis to force the Chinese parent company of the popular video-sharing app TikTok to divest or face a nationwide ban, though the app has since received a 75-day reprieve from President Donald Trump, who is hoping to work out a sale. Monday following a selloff spurred by DeepSeek's success, and the tech-heavy Nasdaq was down 3.5% on the way to its third-worst day of the last two years. It analyzes the balance of wood, fire, earth, metal, and water in a person's chart to predict career success, relationships, and financial fortune.

A reasoning model, on the other hand, analyzes the problem, identifies the right rules, applies them, and reaches the correct answer, no matter how the question is worded or whether it has seen a similar one before. By using GRPO to apply the reward to the model, DeepSeek avoids using a large "critic" model; this again saves memory. According to this post, while earlier multi-head attention techniques were considered a tradeoff, insofar as you reduce model quality to get better scale in large model training, DeepSeek says that MLA not only allows scale, it also improves the model. This fixed attention span means we can implement a rolling buffer cache. This raises some questions about just what exactly "literacy" means in a digital context. Despite the questions remaining about the true cost and process to build DeepSeek's products, they still sent the stock market into a panic: Microsoft (down 3.7% as of 11:30 a.m. First, using a process reward model (PRM) to guide reinforcement learning was untenable at scale.
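
The claim that GRPO avoids a "critic" model can be illustrated with a short sketch. Instead of asking a learned value network for a baseline, the rewards of several completions sampled for the same prompt are compared against their own group mean. The function name `group_relative_advantages`, the use of the population standard deviation, and the `eps` stabilizer are assumptions for this example, not details confirmed by the text above.

```python
from statistics import mean, pstdev
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-8) -> List[float]:
    """Normalize each reward against the mean and spread of its own sampling group.

    The group mean plays the role a critic's value estimate would otherwise
    play, so no separate value network has to be held in memory.
    """
    baseline = mean(rewards)
    spread = pstdev(rewards)  # assumption: population standard deviation
    return [(reward - baseline) / (spread + eps) for reward in rewards]


if __name__ == "__main__":
    # Four sampled completions for one prompt, scored 1.0 (correct) or 0.0 (wrong).
    print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))
```

Completions that score above their group's average receive a positive advantage and those below receive a negative one. When every completion in a group gets the same reward, all advantages are zero and that group contributes no learning signal.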

