Discover a Fast Way to DeepSeek

Author: Selene | Posted: 25-02-13 11:35

DeepSeek has created an algorithm that lets an LLM bootstrap itself: it starts with a small dataset of labeled theorem proofs and generates increasingly higher-quality examples to fine-tune itself. Partly out of necessity and partly to understand LLM evaluation more deeply, we created our own code completion evaluation harness called CompChomper. We have reviewed contracts written with AI assistance that had multiple AI-induced errors: the AI emitted code that worked well for known patterns but performed poorly on the actual, customized scenario it needed to handle. The long-term research goal is to develop artificial general intelligence to revolutionize the way computers interact with humans and handle complex tasks. It is essential to carefully review DeepSeek's privacy policy to understand how they handle user data. Some testers say it eclipses DeepSeek's capabilities. This smaller model approached the mathematical reasoning capabilities of GPT-4 and outperformed another Chinese model, Qwen-72B. You can deploy the model using vLLM and invoke the model server.
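As a minimal sketch of invoking a vLLM model server from Python, assume the server was started with something like `vllm serve deepseek-ai/deepseek-coder-6.7b-instruct` and listens on vLLM's default port 8000, exposing its OpenAI-compatible `/v1/chat/completions` endpoint. The checkpoint name and the helper names `build_chat_request` and `ask` are illustrative assumptions, not details from this post; only the standard library is used.

```python
import json
import urllib.request

# Assumption: vLLM is serving on its default port with the OpenAI-compatible API.
BASE_URL = "http://localhost:8000"
# Hypothetical checkpoint choice; use whatever model you passed to `vllm serve`.
MODEL = "deepseek-ai/deepseek-coder-6.7b-instruct"


def build_chat_request(prompt: str, max_tokens: int = 256,
                       temperature: float = 0.0) -> urllib.request.Request:
    """Build (but do not send) a POST request for the chat completions endpoint."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,  # 0.0 keeps completions close to deterministic
    }
    return urllib.request.Request(
        f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request to a running server and return the first reply's text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("Write a Solidity function that adds two uint256 values.")` returns the model's reply. Building the request separately from sending it lets you inspect the payload without a live server.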

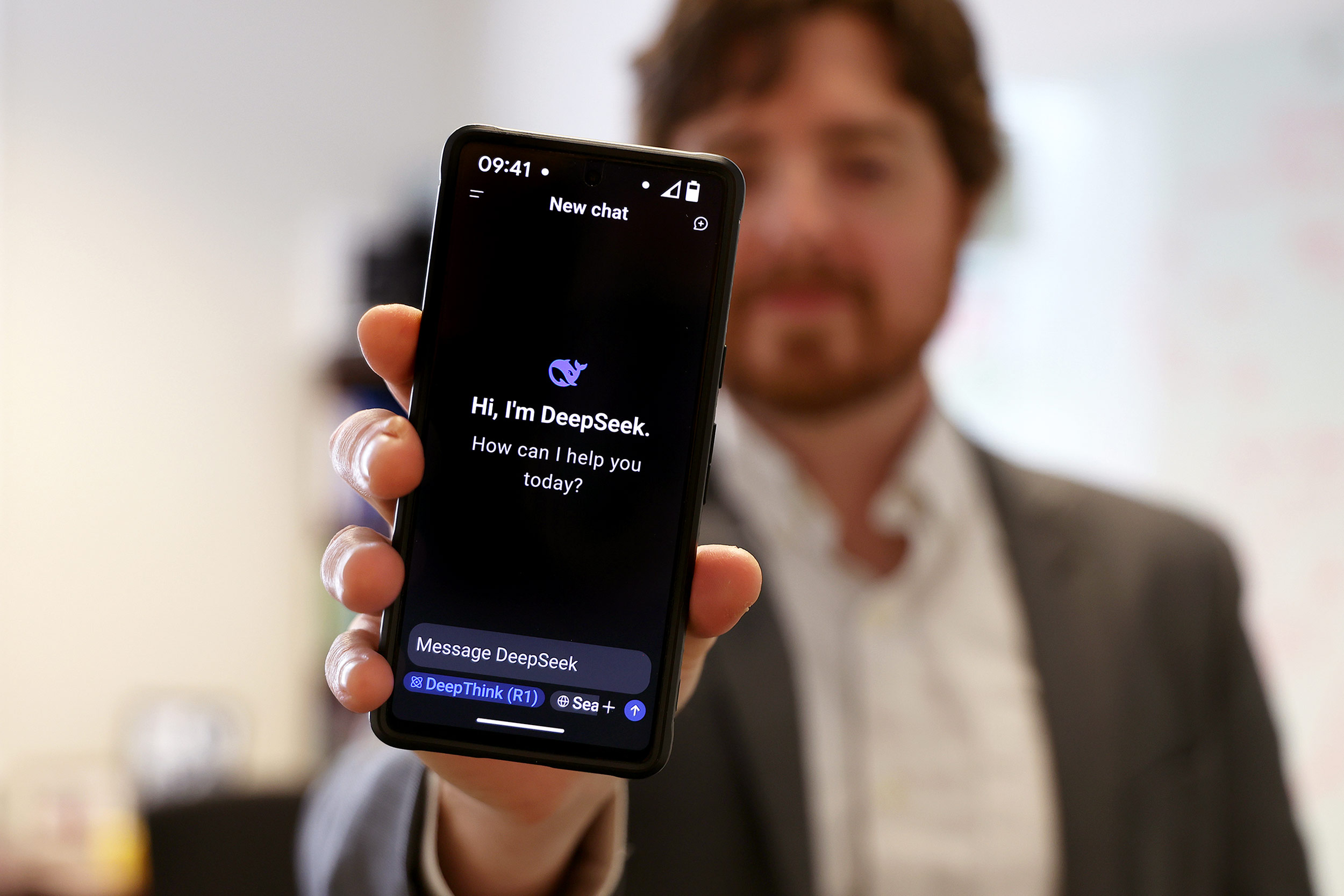

For Android: open the Google Play Store, search for "DeepSeek," and hit "Install" to start using the app on your Android device. But the potential threat DeepSeek poses to national security may be more acute than previously feared because of a potential open door between DeepSeek and the Chinese government, according to cybersecurity experts. DeepSeek aims for more customization in its responses. By making the resources openly accessible, Hugging Face aims to democratize access to advanced AI model development techniques and encourage community collaboration in AI research. This work also required an upstream contribution of Solidity support to tree-sitter-wasm, to benefit other development tools that use tree-sitter. Which model is best for Solidity code completion? Despite its lower training costs, the model delivers performance comparable to top-tier AI models. AppSOC's results reflect some issues that have already emerged around DeepSeek since its release to much fanfare in January, with claims of exceptional performance and efficiency even though it was developed for less than $6 million by a scrappy Chinese startup. On January 27, 2025, the global AI landscape shifted dramatically with the launch of DeepSeek, a Chinese AI startup that has rapidly emerged as a disruptive force in the industry.

For Android: Open the Google Play Store, search for "DeepSeek," and hit "Install" to start using the app on your Android system. But the potential threat DeepSeek poses to nationwide security could also be more acute than beforehand feared because of a potential open door between DeepSeek and the Chinese authorities, in line with cybersecurity consultants. DeepSeek goals for more customization in its responses. By making the resources openly accessible, Hugging Face aims to democratize access to advanced AI model development strategies and encouraging group collaboration in AI analysis. This work additionally required an upstream contribution for Solidity assist to tree-sitter-wasm, to profit other development tools that use tree-sitter. Which model is greatest for Solidity code completion? Despite its lower training costs, the model delivers efficiency comparable to high-tier AI models. AppSOC's results replicate some points that have already emerged round DeepSeek since its release to much fanfare in January with claims of distinctive efficiency and efficiency although it was developed for lower than $6 million by a scrappy Chinese startup. On January 27, 2025, the worldwide AI landscape shifted dramatically with the launch of DeepSeek, a Chinese AI startup has rapidly emerged as a disruptive pressure in the trade.

If we choose to compete we can still win, and, if we do, we will have a Chinese company to thank. With voice search adoption growing, DeepSeek will optimize content for natural language queries. What doesn't get benchmarked doesn't get attention, which means that Solidity is neglected when it comes to large language code models. Unfortunately, these tools are often bad at Solidity. It's as though we're explorers and we've discovered not just new continents, but a hundred different planets, they said. Because as our powers grow we can subject you to more experiences than you have ever had, and you will dream, and these dreams will be new. But we can make you have experiences that approximate this. How will you find these new experiences? So with everything I read about models, I figured if I could find a model with a very low number of parameters I could get something worth using, but the thing is, a low parameter count leads to worse output. The 7B model's training used a batch size of 2304 and a learning rate of 4.2e-4, and the 67B model was trained with a batch size of 4608 and a learning rate of 3.2e-4. We employ a multi-step learning rate schedule in our training process.
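The batch sizes and peak learning rates above can be plugged into a multi-step schedule. The sketch below assumes linear warmup followed by step drops at fixed fractions of training; the warmup length (2000 steps) and the milestones (dropping to 31.6% of the peak at 80% of training and to 10% at 90%) are assumptions for illustration, not values stated in this post, and `multi_step_lr` is a hypothetical helper.

```python
# Peak learning rates and batch sizes quoted in the text above.
CONFIGS = {
    "7B": {"batch_size": 2304, "peak_lr": 4.2e-4},
    "67B": {"batch_size": 4608, "peak_lr": 3.2e-4},
}


def multi_step_lr(step: int, total_steps: int, peak_lr: float,
                  warmup_steps: int = 2000,
                  milestones=((0.8, 0.316), (0.9, 0.1))) -> float:
    """Learning rate at `step` under an assumed warmup + multi-step decay schedule.

    milestones: (fraction_of_training, multiplier_of_peak) pairs in ascending order.
    Each multiplier is relative to peak_lr, so a later milestone replaces an
    earlier one instead of compounding with it.
    """
    if step < warmup_steps:
        # Linear warmup from 0 up to peak_lr (assumed warmup shape).
        return peak_lr * step / warmup_steps
    factor = 1.0
    for fraction, multiplier in milestones:
        if step >= fraction * total_steps:
            factor = multiplier
    return peak_lr * factor


if __name__ == "__main__":
    total = 100_000  # hypothetical run length in optimizer steps
    for name, cfg in CONFIGS.items():
        for step in (1_000, 50_000, 85_000, 95_000):
            lr = multi_step_lr(step, total, cfg["peak_lr"])
            print(f"{name} (batch {cfg['batch_size']}) step {step}: lr={lr:.3e}")
```

A step schedule like this keeps the learning rate at its peak for most of training, which makes it easy to resume or extend a run from a checkpoint taken before the first drop.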

Although it is not clearly defined, the MTP model is usually smaller than the main model (the total size of the DeepSeek V3 model on Hugging Face is 685B, with 671B from the main model and 14B from the MTP module). Released in December 2023, this was the first version of the general-purpose model. However, before we can improve, we must first measure. You specify which git repositories to use as a dataset and what kind of completion you want to measure. Although CompChomper has only been tested against Solidity code, it is largely language-independent and can easily be repurposed to measure completion accuracy in other programming languages. Solidity is present in approximately zero code evaluation benchmarks (even MultiPL, which includes 22 languages, is missing Solidity). We are open to adding support for other AI-enabled code assistants; please contact us to see what we can do. At first we started evaluating popular small code models, but as new models kept appearing we couldn't resist adding DeepSeek Coder V2 Lite and Mistral's Codestral. One thing to consider as an approach to building quality training material to teach people Chapel is that, at the moment, the best code generator for different programming languages is DeepSeek Coder 2.1, which is freely available for people to use.
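To make the "measure first" idea concrete, here is a minimal sketch of a language-agnostic completion harness in the spirit of the one described above. It is not CompChomper's code: the names `CompletionCase`, `make_line_completion_cases`, and `exact_match_accuracy` are hypothetical, the only completion type shown is "predict the next line", and the metric is plain exact match. In practice the source files would come from the git repositories you select, and `complete` would call a real model.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass
class CompletionCase:
    prefix: str    # code before the cursor
    expected: str  # the line the model should produce next


def make_line_completion_cases(source: str, min_prefix_lines: int = 1) -> List[CompletionCase]:
    """Turn one source file into 'complete the next line' cases.

    Language-agnostic by construction: it splits on newlines only, never on syntax.
    """
    lines = source.splitlines(keepends=True)
    cases = []
    for i in range(min_prefix_lines, len(lines)):
        target = lines[i].strip()
        if not target:
            continue  # blank lines tell us nothing about the model
        cases.append(CompletionCase(prefix="".join(lines[:i]), expected=target))
    return cases


def exact_match_accuracy(cases: Iterable[CompletionCase],
                         complete: Callable[[str], str]) -> float:
    """Fraction of cases where the model's first non-blank output line matches exactly."""
    cases = list(cases)
    if not cases:
        return 0.0
    hits = 0
    for case in cases:
        output = complete(case.prefix)
        first_line = next((l.strip() for l in output.splitlines() if l.strip()), "")
        hits += first_line == case.expected
    return hits / len(cases)


# Toy usage: a tiny Solidity file and a stub "model" that always closes a brace.
SOLIDITY = """pragma solidity ^0.8.0;
contract Counter {
    uint256 public count;
    function inc() public {
        count += 1;
    }
}
"""

cases = make_line_completion_cases(SOLIDITY)
print(exact_match_accuracy(cases, lambda prefix: "}\n"))
```

Because the case generator never parses the code, swapping Solidity for another language only means pointing it at different files; a syntax-aware splitter (for example, one built on tree-sitter) would be a natural refinement.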
