Never Changing DeepSeek Will Eventually Destroy You

Author: Chante Landseer | Posted: 25-02-16 16:03

DeepSeek is an emerging artificial intelligence company that has gained attention for its innovative AI models, most notably its open-source reasoning model, which is often compared to ChatGPT. DeepSeek 2.5 has been evaluated against GPT, Claude, and Gemini, among other models, for its reasoning, mathematics, language, and code generation capabilities. 2024 has proven to be a solid year for AI code generation. Many users appreciate the model’s ability to maintain context over longer conversations or code generation tasks, which is essential for complex programming challenges. Users have noted that DeepSeek’s integration of chat and coding functionality gives it a unique advantage over models like Claude and Sonnet. Both of the baseline models purely use auxiliary losses to encourage load balance, and use the sigmoid gating function with top-K affinity normalization. A100 processors," according to the Financial Times, and it is clearly putting them to good use for the benefit of open-source AI researchers. Available now on Hugging Face, the model offers users seamless access via web and API, and it appears to be the most advanced large language model (LLM) currently available in the open-source landscape, according to observations and tests from third-party researchers. The praise for DeepSeek-V2.5 follows a still-ongoing controversy around HyperWrite’s Reflection 70B, which co-founder and CEO Matt Shumer claimed on September 5 was "the world’s top open-source AI model," according to his internal benchmarks, only to see those claims challenged by independent researchers and the wider AI research community, who have so far failed to reproduce the stated results.
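The sentence about baseline models refers to Mixture-of-Experts (MoE) routing, where each token is sent to only a few "expert" sub-networks. The article gives no further detail, so what follows is only a minimal Python sketch of the two techniques it names, assuming a single layer with a handful of experts and made-up token scores: sigmoid gating with top-K affinity normalization, and a generic auxiliary load-balancing loss of the form alpha * sum(f_i * P_i). The function names, the `alpha` value, and the exact loss formula are illustrative assumptions, not DeepSeek’s implementation.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    """Logistic sigmoid, mapping any real score into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def top_k_indices(values: List[float], k: int) -> List[int]:
    """Indices of the k largest values; ties go to the lower index (stable sort)."""
    return sorted(range(len(values)), key=lambda i: -values[i])[:k]


def sigmoid_topk_gate(logits: List[float], k: int) -> List[float]:
    """Gate weights for one token over all experts.

    `logits` stands in for the token-to-expert scores (hypothetical inputs).
    Each score goes through a sigmoid to get an affinity, the k highest
    affinities are kept, and the kept ones are renormalized to sum to 1
    (top-K affinity normalization). Unselected experts get weight 0.
    """
    if not 1 <= k <= len(logits):
        raise ValueError("k must be between 1 and the number of experts")
    affinities = [sigmoid(z) for z in logits]
    chosen = set(top_k_indices(affinities, k))
    total = sum(affinities[i] for i in chosen)
    return [affinities[i] / total if i in chosen else 0.0 for i in range(len(logits))]


def balance_loss(batch_logits: List[List[float]], k: int, alpha: float = 0.01) -> float:
    """Auxiliary load-balancing loss alpha * sum_i f_i * P_i over a batch of tokens.

    f_i: how often expert i is selected, scaled so perfectly even routing gives 1.
    P_i: expert i's mean affinity share, with each token's affinities normalized
    to sum to 1. The loss grows when routing concentrates on the experts that
    also receive the highest affinities. `alpha` is a hypothetical coefficient.
    """
    num_tokens = len(batch_logits)
    num_experts = len(batch_logits[0])
    counts = [0] * num_experts
    share = [0.0] * num_experts
    for logits in batch_logits:
        affinities = [sigmoid(z) for z in logits]
        norm = sum(affinities)
        # Count which experts this token is routed to.
        for i in top_k_indices(affinities, k):
            counts[i] += 1
        # Accumulate each expert's normalized affinity, averaged over tokens.
        for i in range(num_experts):
            share[i] += affinities[i] / norm / num_tokens
    f = [num_experts * c / (k * num_tokens) for c in counts]
    return alpha * sum(fi * pi for fi, pi in zip(f, share))
```

For example, `sigmoid_topk_gate([0.0, 0.0, 0.0, 0.0], 2)` returns `[0.5, 0.5, 0.0, 0.0]`: all four experts tie, the first two win the tie, and their equal affinities are renormalized to 0.5 each. Routing every token to the same two experts yields a larger `balance_loss` than spreading tokens evenly, which is the pressure toward load balance that such a loss is meant to apply.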

As such, there already appears to be a new open-source AI model leader just days after the last one was claimed. This new release, issued September 6, 2024, combines both general language processing and coding functionality into one powerful model. A Chinese lab has created what appears to be one of the most powerful "open" AI models to date. By making DeepSeek-V2.5 open source, DeepSeek-AI continues to advance the accessibility and potential of AI, cementing its role as a leader in the field of large-scale models. This new model enhances both general language capabilities and coding functionality, making it well suited to a wide range of applications. This compression allows for more efficient use of computing resources, making the model not only powerful but also highly economical in terms of resource consumption. Q: Is DeepSeek AI free to use? Whatever the case, it is always advisable to be thoughtful and careful when using any free tool. These GPUs are interconnected using a combination of NVLink and NVSwitch technologies, ensuring efficient data transfer within nodes. AI engineers and data scientists can build on DeepSeek-V2.5, creating specialized models for niche applications or further optimizing its performance in specific domains.
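One way for engineers to build on the model without hosting it themselves is through the API access mentioned above. The following is a minimal Python sketch using only the standard library. It assumes DeepSeek’s OpenAI-compatible chat-completions endpoint (`https://api.deepseek.com/chat/completions`) and the model name `deepseek-chat`, as given in DeepSeek’s public API documentation; both may change, and the model served under that name may be newer than V2.5. The helper names `build_chat_request` and `send` are hypothetical. Building the request is kept separate from sending it, so it can be inspected without a network call or an API key.

```python
import json
import urllib.request
from typing import Dict, List

# Assumption: endpoint and model name as publicly documented by DeepSeek;
# check the current API documentation before relying on either.
API_URL = "https://api.deepseek.com/chat/completions"
MODEL = "deepseek-chat"


def build_chat_request(
    api_key: str, prompt: str, system: str = "You are a helpful assistant."
) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    messages: List[Dict[str, str]] = [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]
    body = json.dumps({"model": MODEL, "messages": messages, "stream": False})
    return urllib.request.Request(
        API_URL,
        data=body.encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the assistant's reply text (needs network)."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a real key, `send(build_chat_request(key, "Write a binary search in Python."))` would return the model’s reply as a string. Using only `urllib` avoids third-party dependencies; an OpenAI-compatible client library pointed at the same base URL is a common alternative.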

DeepSeek 2.5 is a welcome addition to an already impressive catalog of AI code generation models. Performance metrics: it outperforms its predecessors on several benchmarks, such as AlpacaEval and HumanEval, showing improvements in instruction following and code generation. This broadens its applications across fields such as real-time weather reporting, translation services, and computational tasks like writing algorithms or code snippets. According to the Hugging Face announcement, the model is designed to better align with human preferences and has undergone optimization in multiple areas, including writing quality and instruction adherence. DeepSeek-V2.5 has been fine-tuned to match human preferences and has undergone various optimizations, including improvements in writing and instruction following. With an emphasis on better alignment with human preferences, it has undergone numerous refinements to ensure it outperforms its predecessors on nearly all benchmarks. The table below highlights its performance benchmarks. AI observer Shin Megami Boson, a staunch critic of HyperWrite CEO Matt Shumer (whom he accused of fraud over the irreproducible benchmarks Shumer shared for Reflection 70B), posted a message on X stating he’d run a private benchmark imitating the Graduate-Level Google-Proof Q&A Benchmark (GPQA). While typical AI models are trained on supercomputers with over 16,000 chips, DeepSeek engineers needed only 2,000 NVIDIA chips.
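HumanEval results such as those cited above are usually reported as pass@k: the probability that at least one of k generated solutions passes a problem’s unit tests. The article does not say which k its figures use, so this is a generic Python sketch of the standard unbiased estimator published with HumanEval, 1 - C(n - c, k) / C(n, k), where n samples are generated per problem and c of them pass. The function names and the `(n, c)` input format are illustrative assumptions.

```python
from math import comb
from typing import List, Tuple


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for one problem.

    n: samples generated, c: samples that pass the unit tests, k: budget.
    Returns 1 - C(n - c, k) / C(n, k): one minus the probability that a
    size-k subset drawn from the n samples contains no passing sample.
    """
    if not (0 <= c <= n and 1 <= k <= n):
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Too few failing samples to fill a size-k draw, so one must pass.
        return 1.0
    return 1.0 - comb(n - c, k) / comb(n, k)


def benchmark_score(results: List[Tuple[int, int]], k: int) -> float:
    """Average pass@k over problems, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)
```

For instance, a problem where 5 of 10 samples pass has pass@1 of 0.5, and averaging it with a fully solved problem gives `benchmark_score([(10, 5), (10, 10)], 1) == 0.75`. Generating n > k samples and applying the estimator gives a lower-variance score than literally drawing k samples once.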

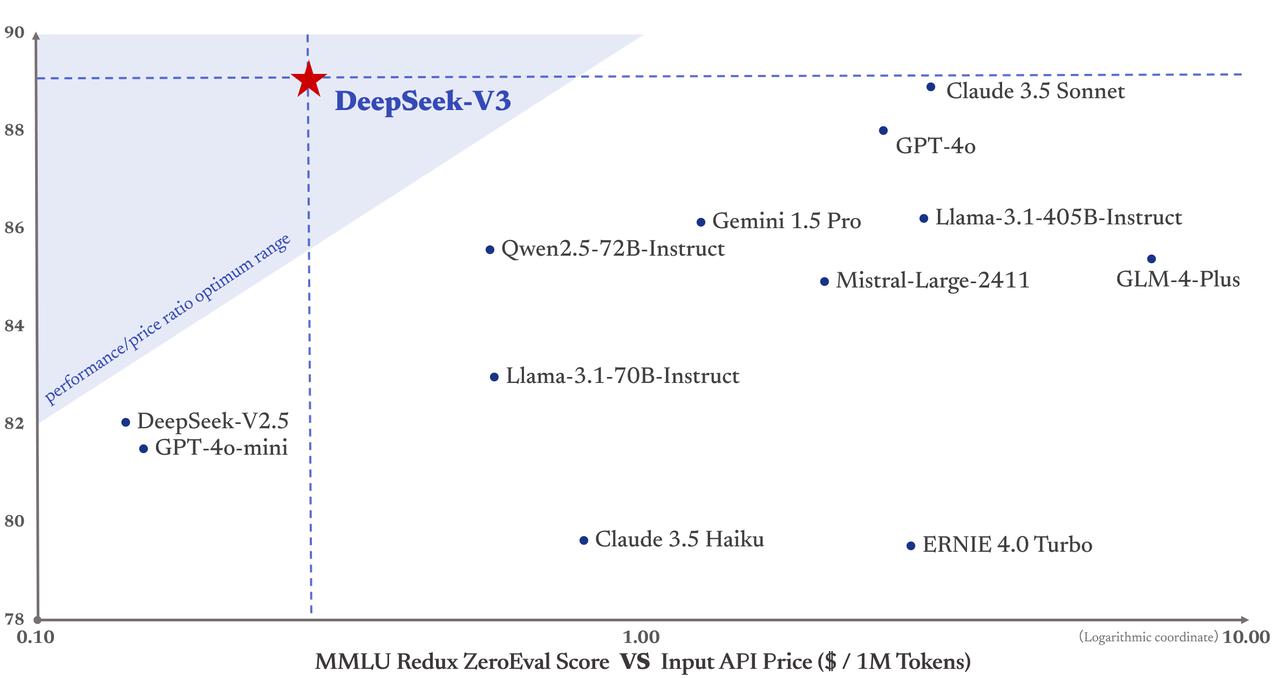

Nigel Powell is an author, columnist, and consultant with over 30 years of experience in the technology industry. DeepSeek unveiled its first set of models (DeepSeek Coder, DeepSeek LLM, and DeepSeek Chat) in November 2023. But it wasn’t until last spring, when the startup released its next-gen DeepSeek-V2 family of models, that the AI industry began to take notice. The integration of previous models into this unified version not only enhances performance but also aligns more closely with user preferences than earlier iterations or competing models like GPT-4o and Claude 3.5 Sonnet. According to Shin Megami Boson’s private benchmark, DeepSeek-V2.5 outperformed Meta’s Llama 3-70B Instruct and Llama 3.1-405B Instruct but underperformed OpenAI’s GPT-4o mini, Claude 3.5 Sonnet, and OpenAI’s GPT-4o. The DeepSeek models, often overlooked in favor of GPT-4o and Claude 3.5 Sonnet, have gained decent momentum in the past few months. In this blog, we discuss DeepSeek 2.5 and all its features, the company behind it, and compare it with GPT-4o and Claude 3.5 Sonnet. This table indicates that DeepSeek 2.5’s pricing is much closer to GPT-4o mini, but in terms of efficiency, it’s closer to the standard GPT-4o. In terms of language alignment, DeepSeek-V2.5 outperformed GPT-4o mini and ChatGPT-4o-latest in internal Chinese evaluations.
